Two Ways an AI Can Play Games

Number 2 will SHOCK you

I sense that most people don’t really know what “artificial intelligence” refers to. We hear that it’s transforming the economy, that it poses an existential risk, or that it sometimes sucks. I rarely understand what a given article means by “artificial intelligence,” and I wonder what the average reader pictures when they see the term. In the corporate world, companies employ the term loosely to prove to their shareholders, consumers, and applicant pool that they’re doing complicated, cutting-edge stuff. Oftentimes, I think companies just slap “and AI” after the term “machine learning” to cover their bases.

As AI discourse proliferates, I think some writers should explain what the heck AI even is. I don’t consider the topic too technical either. Physics and engineering are hard, but anyone with rudimentary coding skills can implement an AI for certain tasks. I’m not smart (unlike my readers, of course), so if I can understand it, others can as well. A lot of writers probably skip the details due to apathy. The guy writing the “AI in baseball” article probably likes baseball, not AI.

The common definitions either illuminate little or omit key distinctions. Here’s Microsoft’s try:

What is artificial intelligence (AI)?

Artificial intelligence is the capability of a computer system to mimic human cognitive functions such as learning and problem-solving. Through AI, a computer system uses math and logic to simulate the reasoning that people use to learn from new information and make decisions.

What is machine learning?

Machine learning is an application of AI. It’s the process of using mathematical models of data to help a computer learn without direct instruction. This enables a computer system to continue learning and improving on its own, based on experience.

I don’t imagine the average reader gains much from these definitions. Unless someone’s trying to create a human brain from scratch, models only “mimic human cognition” in an extremely superficial sense. Meanwhile, Microsoft states that AI employs “math and logic” to “simulate reasoning” while machine learning “us[es] mathematical models… to help a computer learn.” I can’t differentiate these two descriptions, and I wonder if the uninitiated can parse them at all.

These kinds of definitions could only enlighten someone who’s never heard the terms before. If an English language learner asked me what “AI” meant, I’d probably parrot Microsoft’s definition: it’s when technology mimics human behavior. That could help them recognize the equivalent term in their native tongue, but it wouldn’t provide much additional clarity.

With machine learning, I broke the definition into three parts and claimed that “machine learning” refers to any algorithm that does one of those three tasks. I didn’t attempt a simplified holistic definition that encapsulates all three parts. If I did, I’d produce a quasi-meaningless one-liner like those seen in so many data science articles. For AI, I will also use a three-category definition. AI refers to one of:

Machine learning

Traditional AI (Following a set of rules)

Modern AI (Guess and check)

Machine Learning

In many cases, AI just refers to machine learning. The latest New York Times AI article discusses a model’s failure to recognize Native American cultural imagery. The piece mentions image recognition, which sounds like a predictive model. I’ve mentioned a similar trend in the business world, where companies that boasted of their machine learning now claim to do machine learning and AI. The blogging and journalism worlds have followed, and now many articles about machine learning refer to it as AI.

Traditional AI

Traditional AIs replace a human by following a set of instructions from the programmers. Video game computer opponents, for instance, obey a set of instructions that attempts to somewhat mimic human strategy and dexterity. Some instructions are simple, such as an RPG boss randomly choosing between three attacks. Others use more complicated algorithms to act in a more intelligent manner. Either way, the traditional AI will not gain intelligence unless its creator changes the code.

Modern AI

Unlike traditional AI, modern AI contains little direct instruction from the programmer. The code won’t tell the AI to do X if its opponent does Y or Z if its opponent does W. Instead, the modern AI learns from continual success and failure. If an action leads to success, it will choose that action more frequently. Since it learns from play, a programmer can apply a modern AI to a task without a deep understanding of that task. I could make a modern AI for a new game, for example, without knowing the game’s strategies.

Explaining a topic requires specifics. Since I covered machine learning a couple months ago, I’ll only discuss two examples: traditional AI and modern AI. In both cases, I will discuss a board game application of the models, since that’s my only practical experience with AI.

Traditional AI: Minimax

Imagine a game with two potential moves per turn, each with a point value. On my turn, move one scores 50 points and move two scores 40. With this information alone, I would select move one. However, my opponent also wants to maximize his point total. If I select move one, he has a choice between two moves: one that scores 10 and one that scores 80. If I choose move two, he has a choice between a move that grants 45 points and one that grants 55. The scenario therefore looks like this, with my moves in black squares and my opponent’s in blue ones:

If I only considered my turn, I would take the 50-point move over the 40-point one. However, if I take the 50-point one, my opponent will score 80, leaving me down 30. If I take 40, my opponent responds with 55 points, leaving me down only 15. Thus, I would select move two.
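Here’s a minimal sketch of that reasoning in Python. The `GAME` dictionary and the function names are my own hypothetical encoding of the two-move example, not a general game engine. It only looks one reply ahead and assumes my opponent always grabs his highest-scoring response.

```python
# Hypothetical encoding of the two-move game: each of my moves maps to
# (my points, the points my opponent could score in reply).
GAME = {
    "move one": (50, [10, 80]),
    "move two": (40, [45, 55]),
}

def net_score(my_points, replies):
    """My margin once the opponent picks his highest-scoring reply."""
    return my_points - max(replies)

def best_move(game):
    """Pick the move whose margin after the opponent's best reply is largest."""
    return max(game, key=lambda move: net_score(*game[move]))

for move, (points, replies) in GAME.items():
    print(move, net_score(points, replies))  # move one -30, then move two -15
print(best_move(GAME))                         # move two
```

A real minimax repeats this "assume the other side picks what’s best for them" step at every level of the tree instead of stopping after one reply.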

An ideal model would scan all possible turns to find the best one. In practice, we run into hardware or time limits when we try to look many moves ahead. Chess, for example, boasts about 35 potential moves per turn. As such, we would need to scan 35 moves for our next turn, 35^2 for the turn after that, and 35^3 for the turn after that. In a program that takes 1 second to search 100 moves, we could scan one round in under a second, the next in 12 seconds, and the third in 7 minutes. This grows pretty fast, so checking 8 rounds ahead would take over 700 years. Traditional AI therefore faces a tradeoff between the computation time and playing ability. On the bright side, this creates a straightforward difficulty setting for an AI: the harder AIs will search more turns ahead.1
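If you want to check that arithmetic yourself, here’s a quick sketch. The constants come straight from the paragraph above (about 35 moves per turn, 100 moves searched per second); the function name is mine, and it counts only the positions at each depth, like the text does.

```python
BRANCHING = 35          # approximate moves per turn in chess, as above
MOVES_PER_SECOND = 100  # the hypothetical search speed from the text
SECONDS_PER_YEAR = 365.25 * 24 * 3600

def seconds_to_scan(depth):
    """Seconds needed to scan every position exactly `depth` turns ahead."""
    return BRANCHING ** depth / MOVES_PER_SECOND

for depth in (1, 2, 3, 8):
    secs = seconds_to_scan(depth)
    print(f"depth {depth}: {secs:,.2f} seconds, or {secs / SECONDS_PER_YEAR:,.4f} years")
```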

To implement minimax, we just need the point value for each game state and the model will calculate the best move. It’s that simple! Now, while you’re here, can you give me the point value for this game state?

How about this one?

Or how about this one from a modern classic?

Oh, and we also need the point value for each potential opponent response. That’ll be due Thursday at midnight, and will account for 40% of your grade.

As with machine learning, the difficulty of AI stems not from implementing the algorithm but from understanding the problem. Even in simpler games, it’s hard to imagine a single equation that can evaluate every board state. In most games, it’s even hard to imagine what that data might look like. How does a computer “calculate” the value of a chess position?

Data scientists prefer datasets that look something like this one, from the popular “iris” dataset:

In a traditional dataset, each column represents a piece of information about an observation, known as a feature. A chess board, unfortunately, doesn’t offer a clear path to such a dataset. What would our columns contain?

To convert a game state to a dataset, we might consult a subject matter expert and ask them what they look for in a game of chess. As one obvious example, we could create an “opponent_has_queen” column. This column would take the value 1 in a game state where the opponent’s queen remains on the board, and 0 in a state where the opponent’s queen has been captured. We could also create position columns, like one that shows the number of pieces a user has within 3 spaces of our king. After creating the set of relevant features, we would need to assign a value for each one. Having a queen might be worth 10 points, while each piece within 3 spaces of our king receives one point. A programmer could then adjust these values based on the AI’s performance against human opponents.
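As a rough sketch, a hand-built evaluation function could be nothing more than a weighted sum of those columns. Everything here is hypothetical: the feature names, the weights, and especially the signs (I’m assuming the opponent’s queen counts against us and pieces near our king count for us). A real chess engine would need far more features than this.

```python
# Hypothetical hand-picked weights; a programmer would tune these by
# watching how the AI performs against human opponents.
WEIGHTS = {
    "opponent_has_queen": -10,   # assumed: their queen on the board hurts us
    "pieces_near_our_king": 1,   # assumed: one point per piece within 3 spaces
}

def evaluate(features, weights=WEIGHTS):
    """Score a game state as the weighted sum of its feature columns."""
    return sum(weights[name] * value for name, value in features.items())

before_capture = {"opponent_has_queen": 1, "pieces_near_our_king": 3}
after_capture = {"opponent_has_queen": 0, "pieces_near_our_king": 3}
print(evaluate(before_capture))  # -10 + 3 = -7
print(evaluate(after_capture))   # 0 + 3 = 3
```

Minimax would then call something like `evaluate` on every game state it reaches, which is why the quality of these made-up numbers matters so much.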

To recap, minimax requires a human to model the data, create a score, and update that score. You might think, wait a minute, don’t we like AI because it does things for us? What if I don’t know how to play Chess, Go, or that weird looking game with the circles and rings2? Couldn’t a computer just figure out how to play on its own? Well, you’re in luck: let’s introduce modern AI.

Modern AI: Monte Carlo Tree Search

Monte Carlo Basically Means Guessing a Lot

Before we discuss the “Tree Search” part, I should explain what “Monte Carlo” methods refer to. Imagine you need to find the area of an irregular shape like this:

We won’t find a formula for it in our algebra textbook, so we’re going to have to try something else. Let’s draw a square around it, and pretend that square measures 10m by 10m.

We can obtain the square’s area from our algebra textbook: it’s 100 square meters. From here, we could randomly select thousands of points in the square and check if they land inside the four-pointed star. If 20% of such points land inside the star, for instance, then we can estimate the area of the star to be 20 square meters.

More generally, Monte Carlo methods randomly sample data to solve problems with no formulaic solution. In simpler terms, we guess a bunch of times and see what works.
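Here’s a small sketch of the area estimate described above. Since I can’t feed a drawing into Python, I’m standing in for the star with an astroid, a four-pointed curve that happens to have a known area, so we can check how close the guessing gets. The function names, sample size, and seed are all my own choices.

```python
import math
import random

def inside_star(x, y, r=5.0):
    """True if (x, y) lies inside an astroid of 'radius' r centred on the origin."""
    return abs(x) ** (2 / 3) + abs(y) ** (2 / 3) <= r ** (2 / 3)

def estimate_area(n_points=100_000, side=10.0, seed=0):
    """Estimate the star's area from the share of random points landing inside it."""
    rng = random.Random(seed)
    half = side / 2
    hits = sum(
        inside_star(rng.uniform(-half, half), rng.uniform(-half, half), r=half)
        for _ in range(n_points)
    )
    # Share of points inside the star, times the area of the square.
    return side * side * hits / n_points

print(estimate_area())           # the Monte Carlo guess
print(3 * math.pi * 5 ** 2 / 8)  # exact astroid area, roughly 29.45
```

With 100,000 points, the estimate should land within a fraction of a square meter of the exact answer, and more points tighten it further.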

Applying this idea to a game, we can just try all possibilities and see which one leads to the highest likelihood of a win. This allows us to develop an optimal AI without understanding the strategy of a game.

Let’s reconsider our two-move game from above. Before, we needed to assign each move a point value. This time, we try both moves a bunch of times. Each time we do, we simulate a random game from that move. After a large number of simulations, we then see which move generated more wins from the random simulation, and select that one for our turn.
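To make that concrete, here’s a sketch using a toy game I picked for illustration (it is not the two-move game above): a pile of stones where each player removes 1, 2, or 3, and whoever takes the last stone wins. The code never learns the strategy. It just plays random games after each opening move and counts wins. All names and the simulation count are assumptions of mine.

```python
import random

def legal_moves(stones):
    """A player may remove 1, 2, or 3 stones, but no more than remain."""
    return [k for k in (1, 2, 3) if k <= stones]

def random_playout(stones, rng):
    """Play random moves from `stones` (must be > 0). Return True if the
    player who moves first from here takes the last stone."""
    first_player_to_move = True
    while True:
        stones -= rng.choice(legal_moves(stones))
        if stones == 0:
            return first_player_to_move
        first_player_to_move = not first_player_to_move

def win_rate(stones, move, n_sims, rng):
    """Share of random games we win after opening with `move`."""
    remaining = stones - move
    if remaining == 0:
        return 1.0  # we took the last stone ourselves
    # After our move the opponent moves first, so we win when he doesn't.
    wins = sum(not random_playout(remaining, rng) for _ in range(n_sims))
    return wins / n_sims

def choose_move(stones, n_sims=2000, seed=0):
    """Simulate every legal move equally often and pick the best win rate."""
    rng = random.Random(seed)
    rates = {m: win_rate(stones, m, n_sims, rng) for m in legal_moves(stones)}
    return max(rates, key=rates.get), rates

move, rates = choose_move(5)
print(move, rates)
```

With 5 stones, taking 1 should win about two thirds of the random games while the other moves hover around half, which matches the known best move for this game.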

Wait, Random Games?

You might be surprised that we play random games for each move. Could a random game provide meaningful information about the quality of a move? Theoretically, if a move confers an advantage, we should see it win more games even in random simulations. To simulate a more realistic game, we’d need to create an evaluation function for each game state, and then we’d return to the problems with minimax.

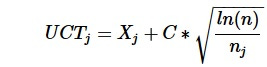

On the other hand, many games feature tons of potential moves (like the 35 in chess), and it would take a long time to run a bunch of simulations on each one. Unlike professional baseball players, our AI can’t spend 700 years between turns. If one move sucks, we need to ensure we don’t waste our time producing simulations from it. To solve this dilemma, we need a policy that tells us which move to simulate. If we simulate all moves evenly, we might waste a bunch of time simulating crappy moves that won’t help. If we try to hand-pick the best moves, we suffer the aforementioned minimax problems. To manage this tradeoff, we simulate moves using Upper Confidence Bounds for Trees (UCT). The formula for UCT looks like this:

I’ll break this down a bit:

On the left-hand side, we have a given move’s simulation score (black box). Our algorithm will always simulate the move with the highest simulation score. That score is the sum of the winning percentage (red box) and exploration score (blue and brown boxes).

After simulating the game, our model will adjust the simulation score in two ways. One, it will update the winning percentage of the move (red box). Two, it will decrease the exploration value of the move (brown box). You can ignore the details of the brown box’s formula for now. In essence, it’s really high for moves that haven’t been simulated much, and really low for moves that have been simulated quite a lot. This encourages the model to explore moves that haven’t been tried much, because, even if they have a low winning percentage, we might not have found their value yet. It discourages exploring moves we’ve tried a bunch of times, since we already know how good they are. Finally, C (blue box) contains a number we pull from our ass. If we prefer a more conservative model, one that’s slower to write off a move, we can increase the value of C. If we want our model to eliminate poor moves faster, we can decrease the value of C. If you have no clue which one you want, set it to sqrt(2).

To simplify even more, we simulate the move with the highest simulation score, defined as

[simulation score] = [winning percentage] + [exploration value]
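For reference, here is UCT as it’s usually written. The symbols are the conventional ones and my own labeling, and the mapping to the colored boxes is my reading of the description above: w_i is the number of simulated wins for move i, n_i is the number of times move i has been simulated, and N is the total number of simulations run so far.

```latex
\underbrace{\mathrm{UCT}_i}_{\text{simulation score}}
  = \underbrace{\frac{w_i}{n_i}}_{\text{winning percentage (red)}}
  + \underbrace{C}_{\text{blue}}
    \,\underbrace{\sqrt{\frac{\ln N}{n_i}}}_{\text{exploration term (brown)}}
```

As n_i grows, the square root shrinks, which is why heavily simulated moves lose their exploration bonus.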

After we run our simulations for a pre-set amount of time, our AI chooses the move with the highest winning percentage.
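Here’s a sketch of that loop, again on a toy stone-pile game of my choosing (remove 1, 2, or 3 stones; last stone wins). To keep it short, it only applies the simulation score to the moves available right now, whereas a full Monte Carlo Tree Search applies the same rule at every level of the tree. It also stops after a fixed number of simulations rather than a fixed amount of time. Function names and defaults are my assumptions.

```python
import math
import random

def legal_moves(stones):
    return [k for k in (1, 2, 3) if k <= stones]

def we_win_after(move, stones, rng):
    """Open with `move`, then play randomly; True if we take the last stone."""
    stones -= move
    our_turn = False  # the opponent moves next
    while stones > 0:
        stones -= rng.choice(legal_moves(stones))
        our_turn = not our_turn
    # Whoever is "to move" now did NOT take the last stone.
    return not our_turn

def uct_score(wins, visits, total_visits, c=math.sqrt(2)):
    """[winning percentage] + [exploration value] for one move."""
    if visits == 0:
        return math.inf  # always try an unsimulated move first
    return wins / visits + c * math.sqrt(math.log(total_visits) / visits)

def uct_choose(stones, iterations=5000, c=math.sqrt(2), seed=0):
    rng = random.Random(seed)
    stats = {m: [0, 0] for m in legal_moves(stones)}  # move -> [wins, visits]
    for total in range(1, iterations + 1):
        # Simulate whichever move currently has the highest simulation score.
        move = max(stats, key=lambda m: uct_score(*stats[m], total, c))
        stats[move][0] += we_win_after(move, stones, rng)
        stats[move][1] += 1
    # When the budget runs out, pick the highest winning percentage.
    best = max(stats, key=lambda m: stats[m][0] / stats[m][1])
    return best, stats

best, stats = uct_choose(5)
print(best, stats)
```

The visit counts in `stats` are the interesting part: the strongest move should soak up most of the simulations, while the weaker ones get just enough attention to confirm they’re weaker.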

Maybe not Random Games

Sometimes, we can’t trust the results of completely random games. In a large enough sample, these random simulations will find the best move. However, almost all real-life implementations face time constraints, so we might need to put our thumb on the scale.

Instead of using a constant C for every move, we can create a predictive model that decreases C for moves that are less likely to win. We could lower C, for instance, in a chess move that puts the queen in danger. Yes, this creates the same problem discussed with minimax: it requires some manual work. Unlike minimax, however, our predictive model doesn’t need to be that good. It doesn’t need to accurately evaluate every game state on its own; it just needs to do so slightly better than random. That’s a much easier task (and a less computationally intensive one).
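One way to sketch this, and I stress it’s only one of many, is to multiply C by a per-move “prior” between 0 and 1 that comes from the predictive model. The `prior` values below are made up by hand for illustration; in practice, they’d come from whatever model you trained.

```python
import math

def uct_score_with_prior(wins, visits, total_visits, prior, base_c=math.sqrt(2)):
    """UCT score where a hypothetical prior in (0, 1] shrinks C for doubtful moves."""
    c = base_c * prior
    return wins / visits + c * math.sqrt(math.log(total_visits) / visits)

# Two moves with identical results so far: 5 wins in 10 simulations, 100 total.
safe_move = uct_score_with_prior(5, 10, 100, prior=1.0)
queen_in_danger = uct_score_with_prior(5, 10, 100, prior=0.3)
print(safe_move > queen_in_danger)  # True: the doubtful move gets explored less
```

The winning percentage is untouched, so a move that the prior distrusts can still earn more simulations if it keeps winning.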

Humans Need Apply

In all cases, creating and optimizing the AI requires a lot of human judgement. I therefore remain a bit skeptical of claims that AI will replace or conquer humans. Without the humans, they do a stupid. On the other hand, I’m not familiar with the cutting-edge research. These older models need human assistance, but maybe the new ones will soon automate every Substack blog on their own. I’m also not surprised to read articles like the New York Times one linked above. While I object to the term “bias” (it has a specific meaning in machine learning), I’m not surprised that an AI would reproduce human errors. There’s no magic; an AI will optimize the inputs provided by the humans.

When reading about minimax, you might find the term “Alpha-Beta pruning.” This algorithm expedites the calculation, but, since it doesn’t change the logic, I don’t explain it here.

It’s called YINSH, and it’s great!

Well…Elon Musk said AI would overtake the human race by 2025 and is more dangerous than nukes. But then again, he said my in-laws would have Internet in rural Michigan by 2020, so…

Oh! Forgot to mention my favorite part! Had to re-read this before I got it:

"Unlike professional baseball players . . ."

LOL!